Elon Musk’s Department of Government Efficiency (DOGE) team is pushing Grok, a custom AI chatbot built by xAI, into U.S. federal agencies to unlock faster data insights. Three sources say the move—and the way it’s rolling out—has privacy advocates and ethics experts sounding the alarm.

Riding the AI Wave in Government

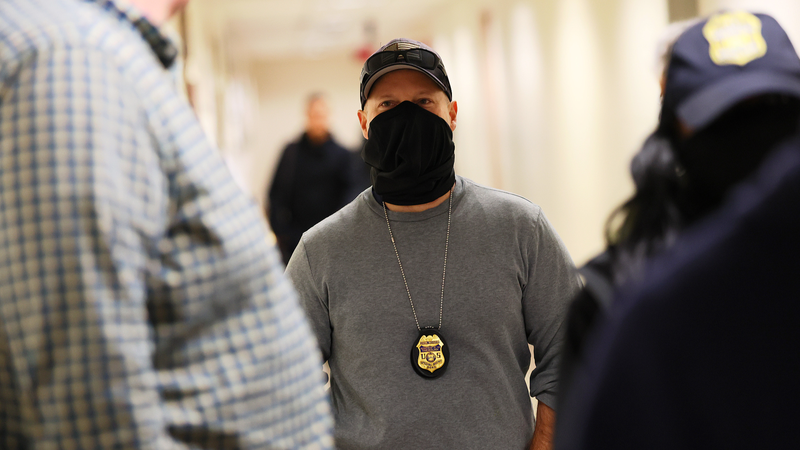

Launched on Musk’s social platform X in 2023, Grok was designed to sift through large datasets on demand. Now, DOGE staff are reportedly asking teams at the Department of Homeland Security and beyond to test-drive Grok for everything from report prep to deep analytics—even though it hasn’t completed internal security checks.

Risk vs. Reward

Five specialists in technology ethics warn that feeding sensitive or non-public federal records into an unvetted AI tool could run afoul of privacy laws and conflict-of-interest regulations. Musk’s ventures, including Tesla and SpaceX, hold lucrative government contracts, which raises the question of whether federal data fed into Grok could be copied or used to inform the training of proprietary AI models.

Stakes for Citizens and Policy

Critics point out that a private AI system operating on federal data may bypass long-standing safeguards around how agencies manage citizens’ information. “They ask questions, get it to prepare reports, give data analysis,” says one insider, describing how the tool is being used. That breadth of use sharpens concerns over accountability, oversight, and data security on a national scale.

Official Pushback

Neither Musk nor the White House responded to requests for comment. A DHS spokesperson insisted that DOGE “hasn’t pushed any employees to use any particular tools or products,” adding that the team’s mission is to “find and fight waste, fraud and abuse.”

As governments worldwide balance innovation with regulation, Musk’s experimental rollout of Grok may well become a case study in public-sector AI adoption—and a cautionary tale about keeping sensitive data under watchful guard.

Reference:

Musk's DOGE expanding his Grok AI in U.S. government, raising concerns (cgtn.com)